Web Scrape Beautifulsoup

BeautifulSoup is a Python library used for parsing documents, mostly HTML or XML files. Using Requests to obtain the HTML of a page and then using BeautifulSoup to extract whatever information you are looking for from that raw HTML is the quasi-standard web scraping “stack” that Python programmers commonly use for relatively easy tasks. Whatever methods an analysis uses, however complex it may be, data is the common ingredient. In this post I will introduce a simple approach to web scraping tasks with BeautifulSoup, a library that lets us navigate HTML and XML documents, such as web pages, and extract the data they contain.

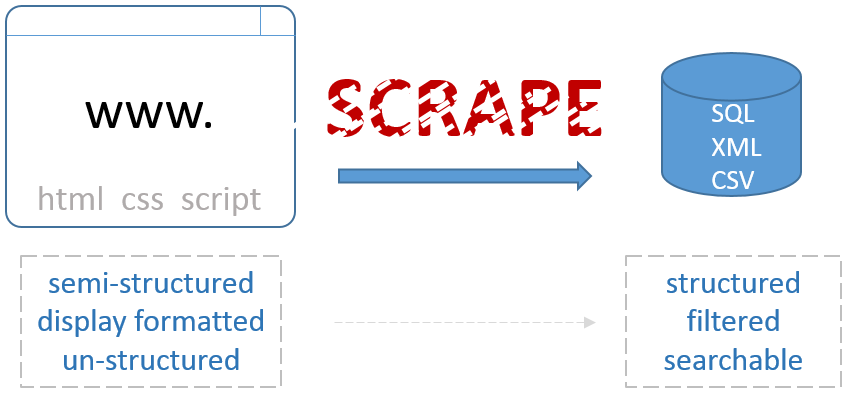

Web scraping is a common technique used to fetch data from the internet for different types of applications. With the almost limitless data available online, software developers have created many tools to make it possible to compile information efficiently. During the process of web scraping, a computer program sends a request to a website on the internet, and an HTML document is sent back as the response. Inside that document is information you may be interested in for one purpose or another. To access this data quickly, the step of parsing comes into play: by parsing the document, we can isolate and focus on the specific data points we are interested in. Common Python libraries that help with this technique are Beautiful Soup, lxml, and Requests. In this tutorial, we’ll put these tools to work to learn how to implement web scraping in Python.

Install The Web Scraping Libraries

To follow along, run these three commands from the terminal. It’s also recommended to use a virtual environment to keep things clean on your system.

- pip install lxml

- pip install requests

- pip install beautifulsoup4

Web Scraping With Python Beautifulsoup

Find A Website To Scrape

To learn how to do web scraping, we can test out a website called http://quotes.toscrape.com/, which looks like it was made for just this purpose.

From this website, maybe we would like to create a data store of all the authors, tags, and quotes from the page. How could that be done? Well, first we can look at the source of the page. This is the data that is actually returned when a request is sent to the website. So in the Firefox web browser, we can right-click on the page and choose “view page source”.

This will display the raw HTML markup of the page. It is shown here for reference.

As you can see from the above markup, there is a lot of data that kind of just looks all mashed together. The purpose of web scraping is to be able to access just the parts of the web page that we are interested in. Many software developers will employ regular expressions for this task, and that is definitely a viable option. The Python Beautiful Soup library is a much more user-friendly way to extract the information we want.
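To make the comparison concrete, here is a minimal sketch that extracts quote text from a small hand-written HTML snippet twice: once with a regular expression and once with Beautiful Soup. The snippet and the helper names `quotes_with_regex()` and `quotes_with_soup()` are illustrative assumptions modeled on the `span` pattern used later in this tutorial, not the page's exact markup.

```python
import re

from bs4 import BeautifulSoup

# Hand-written, simplified sample markup (an assumption, not the real page).
SAMPLE_HTML = """
<div class="quote">
  <span class="text">First quote</span>
</div>
<div class="quote">
  <span class="text">Second quote</span>
</div>
"""


def quotes_with_regex(html):
    # Hypothetical helper. Only matches while class="text" is the first attribute.
    return re.findall(r'<span class="text"[^>]*>(.*?)</span>', html)


def quotes_with_soup(html):
    # Hypothetical helper. Matches on tag name and class, whatever the attribute order.
    soup = BeautifulSoup(html, "lxml")
    return [span.text for span in soup.find_all("span", class_="text")]


print(quotes_with_regex(SAMPLE_HTML))
print(quotes_with_soup(SAMPLE_HTML))
```

Both calls return the same two strings for this snippet. The difference shows up when the markup changes: if another attribute appears before `class`, as in `<span id="q1" class="text">`, the regular expression no longer matches, while `find_all()` still does.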

Building The Scraping Script

In PyCharm, we can add a new file that will hold the Python code to scrape our page.

scraper.py

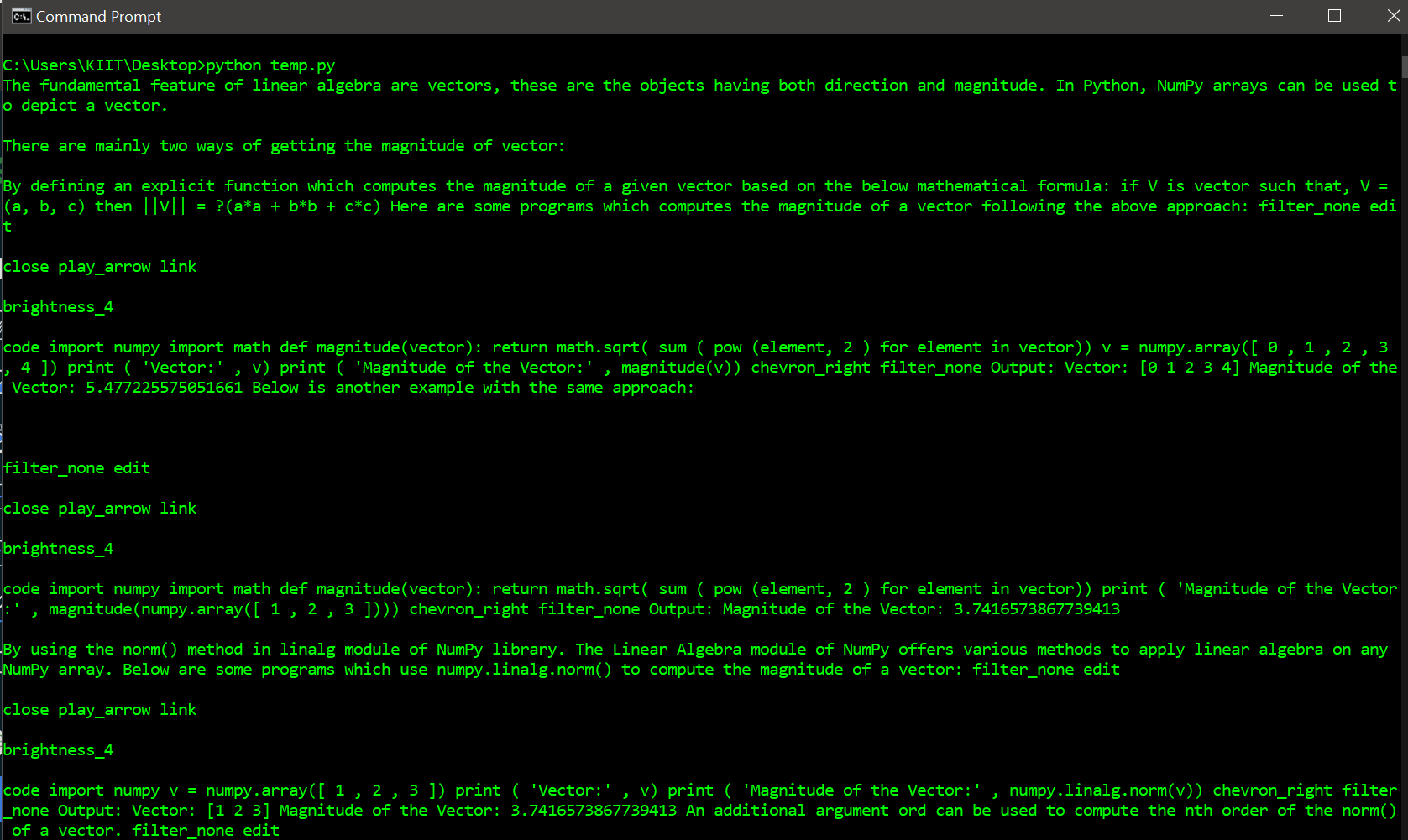

The code above is the beginning of our Python scraping script. At the top of the file, the first thing to do is import the requests and BeautifulSoup libraries. Then we set the URL we want to scrape in the url variable. This is passed to the requests.get() function, and the result is assigned to the response variable. We then use the BeautifulSoup() constructor to parse the response text into the soup variable, with lxml as the parser. Last, we print out the soup variable, and you should see something similar to the screenshot below. Essentially, the software is visiting the website, reading the data, and viewing the source of the website much as we did manually above. The only difference is that this time around, all we had to do was click a button to see the output. Pretty neat!
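Here is a minimal sketch of the script described above, assuming the three libraries installed earlier. The helper names `parse_html()`, `get_soup()`, and `main()`, the `timeout` value, and the `raise_for_status()` check are illustrative additions, not part of the original script.

```python
import requests
from bs4 import BeautifulSoup

# Helper names below are illustrative, not from the original script.
URL = "http://quotes.toscrape.com/"


def parse_html(html: str) -> BeautifulSoup:
    """Parse raw HTML text with the lxml parser installed earlier."""
    return BeautifulSoup(html, "lxml")


def get_soup(url: str) -> BeautifulSoup:
    """Download a page with Requests and hand its text to Beautiful Soup."""
    response = requests.get(url, timeout=10)  # timeout is an added safeguard
    response.raise_for_status()  # raise an error on 4xx/5xx responses
    return parse_html(response.text)


def main() -> None:
    soup = get_soup(URL)
    # Printing the soup shows the same markup as "view page source".
    print(soup)
```

Calling `main()`, for example from an `if __name__ == "__main__":` block at the bottom of scraper.py, downloads the page and prints the parsed markup. Keeping the parsing step in its own function makes it easy to try on an HTML string without touching the network.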

Traversing HTML Structures

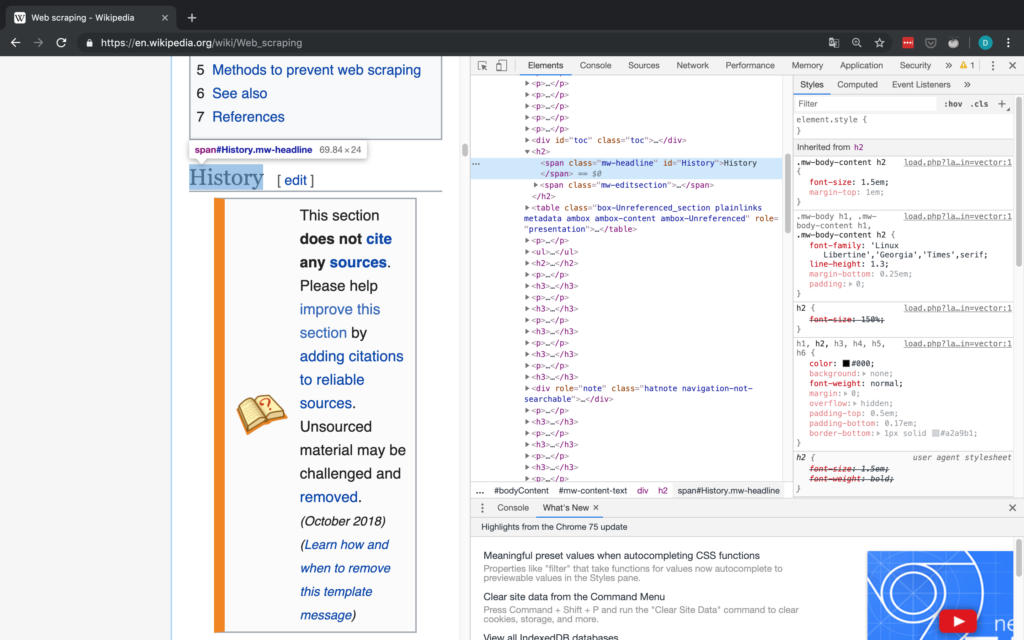

HTML stands for HyperText Markup Language, and it structures a document by wrapping each of its elements in specific tags. HTML has many different tags, but the general layout involves three basic ones: an html tag, a head tag, and a body tag. These tags organize the HTML document, and in our case we’ll mostly be focused on the information within the body tag.

At this point, our script is able to fetch the HTML markup from our designated URL. The next step is to focus on the specific data we are interested in. If you use the inspector tool in your browser, it is fairly easy to see exactly which HTML markup is responsible for rendering a given piece of information on the page. As we hover the mouse pointer over a particular span tag, the associated text is highlighted in the browser window. It turns out that every quote is inside a span tag that also has a class of text. This is how you work out how to scrape data: you look for patterns on the page and then write code that works on that pattern. Have a play around and notice that this works no matter where you place the mouse pointer. We can see the mapping of a specific quote to specific HTML markup, and web scraping makes it possible to fetch all similar sections of an HTML document easily. That’s pretty much all the HTML we need to know to scrape simple websites.
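As a small illustration of the html, head, and body structure and of matching a tag by its class, the sketch below parses a simplified, hand-written stand-in for the quotes page. Only the span tag with a class of text comes from the page as described above; the rest of the sample markup is an assumption.

```python
from bs4 import BeautifulSoup

# Simplified, hand-written sample markup (an assumption, not the real page).
SAMPLE_HTML = """
<html>
  <head><title>Quotes to Scrape</title></head>
  <body>
    <div class="quote">
      <span class="text">A sample quote.</span>
    </div>
  </body>
</html>
"""

soup = BeautifulSoup(SAMPLE_HTML, "lxml")

# The basic structural tags are reachable as attributes of the soup.
print(soup.head.title.text)  # text inside <title>
print(soup.body.name)        # prints "body"

# find() returns the first tag matching a tag name and a CSS class.
# class_ has a trailing underscore because "class" is a Python keyword.
first_quote = soup.find("span", class_="text")
print(first_quote.text)
```

`find()` returns only the first match (or `None` if nothing matches), which is handy for checking a pattern spotted in the inspector before collecting every occurrence.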

Parsing Html Markup

There is a lot of information in the HTML document, but Beautiful Soup makes it really easy to find the data we want, sometimes with just one line of code. So let’s go ahead and search for all span tags that have a class of text. This should find all the quotes for us. When you want to find multiple tags of the same kind on the page, you can use the find_all() function.

scraper.py
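A minimal sketch of this step, assuming the same imports and URL as the starting script. The helper name `find_quote_tags()` and the `timeout` argument are illustrative additions.

```python
import requests
from bs4 import BeautifulSoup

URL = "http://quotes.toscrape.com/"


def find_quote_tags(soup: BeautifulSoup):
    """Hypothetical helper: return every <span class="text"> element (one per quote)."""
    return soup.find_all("span", class_="text")


def main() -> None:
    response = requests.get(URL, timeout=10)
    soup = BeautifulSoup(response.text, "lxml")
    quotes = find_quote_tags(soup)
    # Each item is a whole Tag, so the surrounding <span> markup is printed too.
    print(quotes)
```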

When the code above runs, the quotes variable is assigned a list of all the elements in the HTML document that are span tags with a class of text. Printing out the quotes variable gives us the output we see below. The entire HTML tag is captured along with its inner contents.

Beautiful Soup text property

The extra HTML markup returned by the script is not really what we are interested in. To get only the data we want, in this case the actual quotes, we can use the .text property that Beautiful Soup makes available. Note the new highlighted code here, where we use a for loop to iterate over all of the captured elements and print out only the contents we want.

scraper.py
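A minimal sketch of the .text loop described above. The helper `quote_texts()` is a hypothetical name that returns the strings instead of printing them directly, so the parsing can be reused; `main()` then prints them one per line.

```python
import requests
from bs4 import BeautifulSoup

URL = "http://quotes.toscrape.com/"


def quote_texts(soup: BeautifulSoup):
    """Hypothetical helper: the text inside each <span class="text">, without markup."""
    texts = []
    for quote in soup.find_all("span", class_="text"):
        texts.append(quote.text)  # .text drops the tags and keeps the inner text
    return texts


def main() -> None:
    response = requests.get(URL, timeout=10)
    soup = BeautifulSoup(response.text, "lxml")
    for text in quote_texts(soup):
        print(text)
```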

This gives us a nice output with just the quotes we are interested in.

Neat! To now find all the authors and also print them out as they are associated with each quote, we can use the code below. By following the same steps as before, we first manually inspect the page we want to scrape. We can see that each author is contained inside of a <small> tag with an author class. So we follow the same format as before with the find_all() function and store the result in that new authors variable. We also need to change up the for loop to make use of the range() function so we can iterate over both the quotes and authors at the same time.

scraper.py
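A minimal sketch of pairing quotes with authors using range(), as described above. It assumes every quote has exactly one `<small class="author">` tag and that both lists come back in the same order; `quotes_and_authors()` is a hypothetical helper name.

```python
import requests
from bs4 import BeautifulSoup

URL = "http://quotes.toscrape.com/"


def quotes_and_authors(soup: BeautifulSoup):
    """Hypothetical helper: return (quote, author) pairs."""
    quotes = soup.find_all("span", class_="text")
    authors = soup.find_all("small", class_="author")
    pairs = []
    # range() yields indexes valid for both lists; this assumes one author
    # per quote, listed in the same order as the quotes.
    for i in range(len(quotes)):
        pairs.append((quotes[i].text, authors[i].text))
    return pairs


def main() -> None:
    response = requests.get(URL, timeout=10)
    soup = BeautifulSoup(response.text, "lxml")
    for quote, author in quotes_and_authors(soup):
        print(quote)
        print("-", author)
```

`zip(quotes, authors)` is a more idiomatic way to walk both lists together, although it silently stops at the shorter list if the two ever get out of step.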

Now we get the quotes and each associated author when the script is run.

Finally, we’ll just add some code to fetch all the tags for each quote as well. This one is a little trickier because we first need to fetch each outer wrapping div of each collection of tags. If we didn’t do this first step, then we could fetch all the tags but we wouldn’t know how to associate them to a quote and author pair. Once the outer div is captured, we can drill down further by using the find_all() function again on *that* subset. From there we have to add an inner loop to the first loop to complete the process.
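A minimal sketch of the nested loop described above. The text doesn’t name the classes involved, so this assumes each quote’s tags are wrapped in a `<div class="tags">` with every tag as an `<a class="tag">` link; check those names with the inspector before relying on them. `quotes_authors_tags()` is a hypothetical helper name.

```python
import requests
from bs4 import BeautifulSoup

URL = "http://quotes.toscrape.com/"


def quotes_authors_tags(soup: BeautifulSoup):
    """Hypothetical helper: return (quote, author, [tags]) triples."""
    quotes = soup.find_all("span", class_="text")
    authors = soup.find_all("small", class_="author")
    # Assumed markup: one wrapping <div class="tags"> per quote.
    tag_divs = soup.find_all("div", class_="tags")

    results = []
    for i in range(len(quotes)):
        tags = []
        # Inner loop: find_all() on the wrapper div searches only that subset.
        # Assumed markup: each tag is an <a class="tag"> link.
        for tag in tag_divs[i].find_all("a", class_="tag"):
            tags.append(tag.text)
        results.append((quotes[i].text, authors[i].text, tags))
    return results


def main() -> None:
    response = requests.get(URL, timeout=10)
    soup = BeautifulSoup(response.text, "lxml")
    for quote, author, tags in quotes_authors_tags(soup):
        print(quote)
        print(author)
        print("Tags:", ", ".join(tags))
```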

This code now gives us the following result. Pretty cool, right?!

Practice Web Scraping

Another great resource for learning how to web scrape can be found at https://scrapingclub.com. There are many tutorials there that cover how to use another Python web scraping framework called Scrapy. In addition, the site offers several practice web pages for scraping that we can use. We can start with this URL: https://scrapingclub.com/exercise/list_basic/?page=1

We want to simply extract the item name and price from each entry and display them as a list. So step one is to examine the source of the page to determine how we can search the HTML. It looks like we have some Bootstrap classes we can search on, among other things.

With this knowledge, here is our Python script for this scrape.
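Here is a minimal sketch of such a script. The markup details are assumptions based on a typical Bootstrap card layout (a div with class card per product, the name in an h4 with class card-title, the price in an h5); the practice page’s markup may differ, so confirm the class names with the inspector first. `extract_items()` is a hypothetical helper name.

```python
import requests
from bs4 import BeautifulSoup

URL = "https://scrapingclub.com/exercise/list_basic/?page=1"


def extract_items(soup: BeautifulSoup):
    """Hypothetical helper: return (name, price) pairs from product cards."""
    items = []
    # Assumed layout: one <div class="card"> per product, the name in an
    # <h4 class="card-title"> and the price in the card's first <h5>.
    for card in soup.find_all("div", class_="card"):
        name = card.find("h4", class_="card-title")
        price = card.find("h5")
        if name is None or price is None:
            continue  # this card does not match the assumed layout
        items.append((name.text.strip(), price.text.strip()))
    return items


def main() -> None:
    response = requests.get(URL, timeout=10)
    soup = BeautifulSoup(response.text, "lxml")
    for number, (name, price) in enumerate(extract_items(soup), start=1):
        print(f"{number}) Price: {price}, Item Name: {name}")
```

`class_="card"` matches any div whose class list contains the token card, so a div with `class="card h-100"` is found while one with `class="card-body"` is not.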

Web Scraping More Than One Page

The URL above is a single page of a paginated collection. We can see that by the page=1 in the URL. We can also set up a Beautiful Soup script to scrape more than one page at a time. Here is a script that scrapes all of the linked pages from the original page. Once all those URLs are captured, the script can issue a request to each individual page and parse out the results.

scraper.py
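A minimal sketch of the multi-page approach, under stated assumptions: pagination links are taken to be `<a>` tags whose href contains `?page=`, and each product uses an assumed Bootstrap card layout (div.card, h4.card-title, h5). `get_soup()`, `page_links()`, `extract_items()`, and `scrape_all_pages()` are hypothetical helper names.

```python
from urllib.parse import urljoin

import requests
from bs4 import BeautifulSoup

# Helper names below are illustrative, not from the original script.
START_URL = "https://scrapingclub.com/exercise/list_basic/?page=1"


def get_soup(url: str) -> BeautifulSoup:
    response = requests.get(url, timeout=10)
    response.raise_for_status()
    return BeautifulSoup(response.text, "lxml")


def page_links(soup: BeautifulSoup, base_url: str):
    """Collect the start URL plus every distinct pagination URL linked from it."""
    urls = [base_url]
    # Assumption: pagination links are <a> tags whose href contains "?page=".
    for link in soup.find_all("a", href=True):
        if "?page=" in link["href"]:
            # urljoin turns a relative href such as "?page=2" into a full URL.
            absolute = urljoin(base_url, link["href"])
            if absolute not in urls:  # "Next"/"Previous" links repeat page URLs
                urls.append(absolute)
    return urls


def extract_items(soup: BeautifulSoup):
    """Return (name, price) pairs, assuming Bootstrap-style product cards."""
    items = []
    for card in soup.find_all("div", class_="card"):
        name = card.find("h4", class_="card-title")
        price = card.find("h5")
        if name is not None and price is not None:
            items.append((name.text.strip(), price.text.strip()))
    return items


def scrape_all_pages(start_url: str = START_URL):
    first_page = get_soup(start_url)
    all_items = []
    for url in page_links(first_page, start_url):
        # Page 1 is requested again here, which keeps the loop simple.
        all_items.extend(extract_items(get_soup(url)))
    return all_items
```

This only discovers pages linked from the first page. If a site shows just a window of page numbers, you would need to follow the "next" link page by page instead.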

Running that script then scrapes all the pages in one go and outputs a large list like so.

Learn More About Beautiful Soup

- Beautiful Soup Web Scraper Python (realpython.com)

- Python And Beautifulsoup Web Scraping Tutorial (medium.com)

- Implementing Web Scraping In Python With Beautifulsoup (tutorialspoint.com)

- Step By Step Tutorial Web Scraping Wikipedia With Beautifulsoup (towardsdatascience.com)

- Python Beautiful Soup Web Scraping Script (letslearnabout.net)

- Scraping Amazon Product Information With Python And Beautifulsoup (hackernoon.com)

- Quick Web Scraping With Python Beautiful Soup (levelup.gitconnected.com)

- Webscraping With Python Beautiful Soup And Urllib3 (dzone.com)

- Web Scraping Tutorial Python (dataquest.io)

- Python Tutorial Beautiful Soup (tutorials.datasciencedojo.com)

- Python Beautifulsoup (zetcode.com)

- Python On The Web Beautifulsoup (pythonforbeginners.com)

- How To Scrape Web Pages With Beautiful Soup And Python 3 (digitalocean.com)

Python Web Scraping With Beautiful Soup Summary

Beautiful Soup is one of several libraries available for web scraping with Python, and as we saw in this tutorial, it is very easy to get started with. Web scraping scripts can be used to gather and compile data from the internet for various types of data analysis projects, or whatever else your imagination comes up with.